Facebook, Politics, and Bias: When is a Thing a Thing?

This week, Facebook founder Mark Zuckerberg testified before a joint committee hearing in Congress regarding the Cambridge Analytica data breach and what Facebook does to protect user data. During the course of the public Tuesday sessions, some members of the House and Senate took the opportunity to criticize Facebook for perceived bias against conservatives. In some cases, it was a stretch to say the questions were even tangentially related to the purpose of the hearing. In two particular instances, I was surprised to find that the mountain was a molehill that I was already familiar with.

This is my first in-depth post on a hot topic. One of the things that I have been planning to blog about is what I have observed over the last few years being on Facebook and in the world in general, in particular what the 2016 election cycle revealed to me about people I know and how what they share and say on Facebook fits into the broader conversation taking place in American political culture. I am not planning to talk about specific people; I am just going to reflect on the growing divide I see happening in the body politic. It is time to get the conversation out of my head and onto the page.

Whispers of Discrimination

On Good Friday, I was scrolling through my news feed and saw a friend's post that said something like "Facebook censors cross." I was curious about what prompted that friend's comment, so I clicked the link and read the blog post. The short version is that the Facebook algorithm/content team had spit back a rejection of an advertisement posted by an alma mater of mine that used the San Damiano Cross, which has a painted image of the Crucifixion. The cited reason was that there was a depiction of violence. The blog post itself on the University's page did not directly imply that any discrimination was suspected, but it also did not say that there was no suspicion of it. Instead of saying, 'we appealed it and we are sure that there will be no issue, but the phrasing of the notice prompted the following catechetical reflection on this Easter Triduum,' the author of the blog post left it entirely open to the interpretation of the readers. (Note: According to a comment on their page, there was no plan to appeal the ad until Tuesday when the University would reopen after the holiday, but the blog post was still posted Friday.) The image of a crucifixion is a violent one, so an algorithm that is searching for terrorist imagery to remove could certainly have flagged the ad.

Of course, the reflexive reaction and interpretation in the shares I saw was that it just had to be religious discrimination by Facebook. Some people did take a step back and comment that they had had similar experiences, and that it was always quickly resolved on appeal. When it comes to the automation of customer service, algorithms are not perfect, but there is an appeal process that can fix it when a mistake is made.

I have a really hard time believing that it never occurred to anyone at the University that the blog post would be taken as a sign of religious discrimination, and that it was a bad idea to publish that particular post without a disclaimer that no appeal had been made at that point in time.

It was never a thing that the ad rejection was spurred by discrimination. It got caught up in an echo chamber of people who find validation in affirmations that Facebook discriminates against conservative viewpoints. (This is something I have observed a lot on social media, and to be fair, yes, people on the liberal side have a separate echo chamber. I just observe far more gnashing of teeth from the right.) After the blog post was shared on social media, some news outlets ran with it as a thing, and it was made into a thing.

What Happened at the Hearing

As of this week, I had assumed the fifteen minutes of overreaction was over, but then there were the hearings in Congress about Facebook privacy and Cambridge Analytica - something that is actually a thing. On Tuesday, Senator Ted Cruz quickly made headlines by questioning Zuckerberg about instances where it might appear that Facebook has censored conservative viewpoints. It was a good play to his base. On Wednesday I became aware that two Congressional members asked about the blocked University ad, and in a very predictable twist, plenty of the comments that I saw expressed skepticism that Zuckerberg was sincere in his answers or that there really was no bias.

It is not unusual for any politician, especially those whose viewpoints would not be benefited by a certain narrative, to ask tangential and even misleading questions in a hearing. Nor is it unheard of that they would use the time to make a statement and/or cut off attempts at a response. (All of this happened in the clips I linked to above.) The main topic of the day was the theft of Facebook user data by Cambridge Analytica, which we can presume was used by them for their client the Trump campaign. It created yet another trust and safety issue for Facebook, and this prompted the hearing in part because that information could potentially have been used to improperly influence an election - specifically 2016. So, it is not surprising that Republicans in the committee hearing went on these kinds of tangents, even though Republicans clearly benefited from all the sharing on Facebook during the 2016 election.

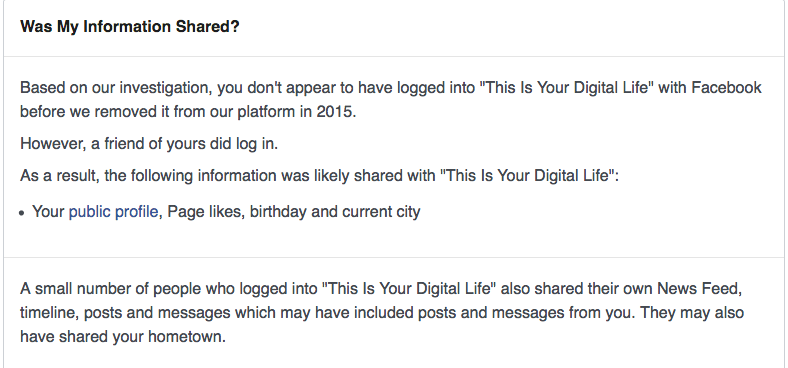

This week, Facebook has provided users with some boilerplate statements about the status of their personal information and whether it was scraped by Cambridge Analytica. The fact that apps are scraping our data and that of our friends when we use them is nothing new. The issue here was that the data was taken in violation of the terms of service. Then, when we checked the notice this week, we also found out that our private messages, which are not supposed to get shared, were likely to have been a part of the data scrape.

My information was "likely" shared by a friend who used the CA app. Thanks, you guys ;)

Facebook, Business Decisions, and Bias

It is not news that Facebook has struggled to keep itself an open market for the exchange of ideas and social interaction even while allowing targeted advertising in order to monetize the site. Facebook users value the fact that it is free, but it is still a privately owned, for-profit business. The trade-off for being on the site is that the information we mindlessly share there can be used to target advertising. Many third parties use the platform to reach the people who are most likely to interact with their posts. They are there to game the system to get clicks, and the system exists in order to allow them to do that. We still live in a wild, wild west when it comes to laws and regulations surrounding our data, but it is out there and there are people whose job it is to crunch that data and make it useful.

There have been times when Facebook was accused of bias, like when claims were made that news curators were suppressing conservative voices from the trending feed. That also happened to be at a time when the algorithm for trending was still being perfected. Then, when Facebook took the human editors off the curation job and let the algorithm do all the work of keeping the news real, a lot of right-leaning fake news stories started showing up at the top. We now know for sure that conservatives were much more likely to share fake news stories, but there were definitely plenty of such stories shared on the left. You can have content accountability, but you have to accept the risks of human error and even the occasional bias.

Facebook is still a business first, and conservatives generally love a good capitalist story. It is a story of innovation, profit, and a pretty damn free marketplace for ideas. We should want to keep it free and open. There have been a lot of growing pains in using social media over the last few years as we as a society have come to realize the impact that the information shared there has had on us. Facebook gets a lot of the coverage. However, social media is not really all that different from past mediums of social communication. It is just faster - much, much faster. When people do not take two minutes to do a smell test, things get blown out of proportion and/or opposing viewpoints are dismissed as obviously fake or biased. What we have forgotten to do is listen and reflect on the biases that we ourselves bring to the conversation.

***

For further reading on the impact of echo-chambers and epistemic bubbles on American society, I highly recommend this piece at Aeon.
